Prof. Shaohua Wan

University of Electronic Science and Technology of China, China

Open Access

Article

Article ID: 450

by Kishore Kumar Akula, Monica Akula, Alexander Gegov

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

We previously developed two AI-based automatic medical image classification tools using a multi-layer fuzzy approach (MFA and MCM) to convert image-based abnormality into a quantity. However, there is currently limited research on using diagnostic image assessment tools to statistically predict the hazard posed by a disease. The present study introduces a novel approach that addresses a substantial research gap: identifying the hazard or risk associated with a disease from an automatically quantified image-based abnormality. The method used to ascertain the hazard associated with an image-based quantified abnormality was the Cox proportional hazards (PH) model, a widely used tool in medical research for identifying the hazard associated with covariates. MFA was first used to quantify the abnormality in CT scan images, and hazard plots were used to visually represent the hazard over time. Hazards corresponding to the image-based abnormality were then computed for the variables ‘gender’, ‘age’, and ‘smoking-status’. This integrated framework could minimize false negatives, identify the patients with the highest mortality risk, and facilitate timely initiation of treatment. By using pre-existing patient images, this method could reduce the considerable costs associated with public health research and clinical trials. Furthermore, understanding the hazard posed by widespread global diseases such as COVID-19 helps medical researchers make prompt decisions about treatment and preventive measures.
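
As a reference point for the Cox PH model named above, the following is a minimal stdlib-only Python sketch, not the authors' implementation. It assumes the standard form h(t | x) = h0(t) · exp(β · x) and no tied event times; the function names, the covariate encoding, and the coefficients in the usage block are hypothetical illustrations, not values from the study.

```python
import math
from typing import Sequence


def linear_predictor(beta: Sequence[float], x: Sequence[float]) -> float:
    """beta . x, the log relative hazard for one subject."""
    return sum(b * xi for b, xi in zip(beta, x))


def hazard_ratio(beta: Sequence[float], x_a: Sequence[float], x_b: Sequence[float]) -> float:
    """h(t | x_a) / h(t | x_b) under h(t | x) = h0(t) * exp(beta . x).

    The baseline hazard h0(t) cancels, so the ratio does not depend on t
    (the 'proportional hazards' assumption).
    """
    return math.exp(linear_predictor(beta, x_a) - linear_predictor(beta, x_b))


def partial_log_likelihood(
    beta: Sequence[float],
    times: Sequence[float],
    events: Sequence[int],
    covariates: Sequence[Sequence[float]],
) -> float:
    """Cox partial log-likelihood, assuming no tied event times.

    events[i] is 1 if subject i had the event at times[i], 0 if censored.
    Censored subjects contribute only through the risk sets of others.
    """
    total = 0.0
    for i, (t_i, d_i) in enumerate(zip(times, events)):
        if not d_i:
            continue
        # Risk set: every subject still under observation at time t_i.
        denom = sum(
            math.exp(linear_predictor(beta, covariates[j]))
            for j, t_j in enumerate(times)
            if t_j >= t_i
        )
        total += linear_predictor(beta, covariates[i]) - math.log(denom)
    return total


if __name__ == "__main__":
    # Hypothetical covariates: [abnormality score, age in decades, smoker (0/1)]
    # and hypothetical coefficients; not values from the study.
    beta = [0.8, 0.3, 0.5]
    smoker = [0.4, 6, 1]
    non_smoker = [0.4, 6, 0]
    print(round(hazard_ratio(beta, smoker, non_smoker), 3))  # 1.649, i.e. exp(0.5)
```

Because h0(t) cancels, a fitted coefficient β_k reads directly as a hazard ratio exp(β_k) per unit increase in covariate k, which is the usual way hazards for a variable such as smoking status are reported. Estimating β means maximizing the partial log-likelihood; in practice this is typically done with a survival-analysis library (for example lifelines' `CoxPHFitter` or R's `survival::coxph`) rather than by hand.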

Open Access

Article

Article ID: 441

by Abdulaziz Alhowaish Luluh, Muniasamy Anandhavalli

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

Deep learning (DL) techniques, which implement deep neural networks, have become popular as high-performance computing facilities have grown. DL gains power and flexibility from its ability to process many features when dealing with unstructured data. A DL algorithm passes data through several layers; each layer extracts features progressively and passes them to the next. Initial layers extract low-level features, and succeeding layers combine them into a complete representation. This research applies DL techniques to identifying sounds. Developments in DL models have extensively covered the classification and verification of objects in images. However, there have not been any notable findings concerning the identification and verification of an individual's voice among other individuals using DL techniques. Hence, the proposed research aims to develop DL techniques capable of isolating an individual's voice from a group of other sounds and classifying it using the convolutional neural network models AlexNet and ResNet. For the voice identification problem, ResNet and AlexNet achieved classification accuracies of 97.2039% and 65.95%, respectively, with ResNet achieving the best result.

Open Access

Article

Article ID: 440

by Irshad Ahmad Thukroo, Rumaan Bashir, Kaiser Javeed Giri

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

Spoken language identification is the process of assigning a language label to an audio segment regardless of features such as length, ambiance, duration, topic or message, age, gender, region, and emotions. Language identification systems are of great significance in natural language processing, specifically in multilingual machine translation, language recognition, and the automatic routing of voice calls to nodes associated with a particular language. In this paper, we compare results for various cepstral and spectral feature techniques, namely Mel-frequency Cepstral Coefficients (MFCC), Relative Spectral-Perceptual Linear Prediction coefficients (RASTA-PLP), and spectral features (roll-off, flatness, centroid, bandwidth, and contrast), for spoken language identification using a Recurrent Neural Network-Long Short-Term Memory (RNN-LSTM) model for sequence learning. The model was implemented for six languages: Ladakhi and the five official languages of Jammu and Kashmir (Union Territory). The experimental dataset consists of TV audio recordings for Kashmiri, Urdu, Dogri, and Ladakhi, together with the standard IIIT-H and VoxForge corpora containing English and Hindi audio. The dataset is pre-processed by removing different types of noise with a Spectral Noise Gate (SNG) and then slicing the audio into 5-second bursts. Performance is evaluated using standard metrics: F1 score, recall, precision, and accuracy. The experimental results showed that spectral features, MFCC, and RASTA-PLP achieved average accuracies of 76%, 83%, and 78%, respectively. MFCC therefore proved the most suitable feature for language identification with an RNN-LSTM classifier.
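
The 5-second slicing step and three of the spectral features listed above (centroid, roll-off, flatness) can be illustrated with a small stdlib-only Python sketch. This is not the authors' pipeline: the noise gate, MFCC, and RASTA-PLP are omitted, the spectrum is computed with a naive DFT for clarity rather than speed, and dropping a trailing partial burst and the 85% roll-off threshold are assumptions. Production code would normally use a library such as librosa, which provides these features directly.

```python
import cmath
import math
from typing import List, Sequence


def slice_bursts(samples: Sequence[float], sample_rate: int, seconds: float = 5.0) -> List[Sequence[float]]:
    """Cut a signal into consecutive fixed-length bursts; a trailing partial burst is dropped."""
    n = int(sample_rate * seconds)
    return [samples[i:i + n] for i in range(0, len(samples) - n + 1, n)]


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """One-sided DFT magnitudes (bins 0..N/2), computed naively in O(N^2)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def bin_frequencies(n: int, sample_rate: int) -> List[float]:
    """Frequency in Hz of each one-sided DFT bin for a frame of n samples."""
    return [k * sample_rate / n for k in range(n // 2 + 1)]


def spectral_centroid(mags: Sequence[float], freqs: Sequence[float]) -> float:
    """Magnitude-weighted mean frequency ('centre of mass' of the spectrum)."""
    total = sum(mags)
    return sum(f * m for f, m in zip(freqs, mags)) / total if total > 0 else 0.0


def spectral_rolloff(mags: Sequence[float], freqs: Sequence[float], fraction: float = 0.85) -> float:
    """Lowest bin frequency at which cumulative magnitude reaches `fraction` of the total."""
    target = fraction * sum(mags)
    cumulative = 0.0
    for f, m in zip(freqs, mags):
        cumulative += m
        if cumulative >= target:
            return f
    return freqs[-1]


def spectral_flatness(mags: Sequence[float], eps: float = 1e-10) -> float:
    """Geometric mean / arithmetic mean of the power spectrum (near 0 = tonal, near 1 = noise-like)."""
    power = [m * m + eps for m in mags]
    geo = math.exp(sum(math.log(p) for p in power) / len(power))
    return geo / (sum(power) / len(power))


if __name__ == "__main__":
    sr, n = 64, 64
    tone = [math.sin(2 * math.pi * 8 * t / sr) for t in range(n)]  # pure 8 Hz test tone
    mags = magnitude_spectrum(tone)
    freqs = bin_frequencies(n, sr)
    print(spectral_centroid(mags, freqs), spectral_rolloff(mags, freqs), spectral_flatness(mags))
```

For a pure tone the centroid and roll-off both sit at the tone's frequency and flatness is close to zero, whereas broadband noise pushes flatness towards one; frame-level values like these are what a sequence model such as an LSTM would consume.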

Open Access

Article

Article ID: 535

by Yuchen Wang, Yin-Shan Lin, Ruixin Huang, Jinyin Wang, Sensen Liu

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

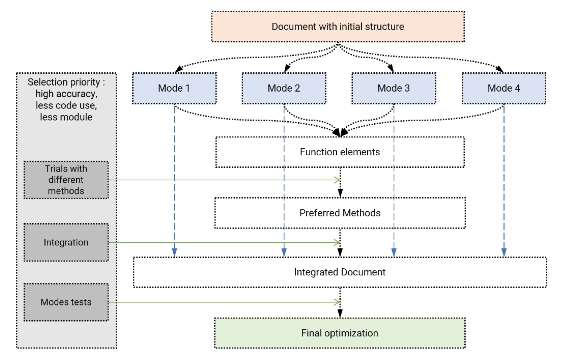

This paper begins with a theoretical exploration of the rise of large language models (LLMs) in Human-Computer Interaction (HCI), their impact on user experience (HX), and related challenges. It then discusses the benefits of Human-Centered Design (HCD) principles and the possibility of applying them within LLMs, and derives six specific HCD guidelines for LLMs. A preliminary experiment is then presented to demonstrate how HCD principles can be used to enhance user experience within GPT: a single document is supplied to GPT’s Knowledge base as a new knowledge resource that controls the interactions between GPT and users, aiming to meet the diverse needs of hypothetical software learners as far as possible. The experimental results show how the form and organization of elements in the document, as well as GPT’s relevant configurations, affect the effectiveness of interaction between GPT and software learners. A series of trials was conducted to find better methods for displaying text and images and for performing jump actions. Two template documents are compared in terms of their performance across the four interaction modes. Through continuous optimization, an improved version of the document was obtained to serve as a template for future use and research.

Open Access

Article

Article ID: 1258

by Lambrini Seremeti, Ioannis Kougias

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

Society is rapidly changing into an implicitus one. The main factor driving this societal transition is the integration of artificial intelligence (AI), which influences all aspects of the anthropocentric legal order. The deep concern to safeguard fundamental human rights under unforeseeable circumstances that threaten hypostasis leads those involved in legal praxis to reorganize the legal system to ensure its functional continuity. To this end, a reliable extra-legal tool, such as the doctrine of necessity, is proposed to validate AI development that falls outside the purview of any legal process yet is necessary for the prosperity of society.

Open Access

Article

Article ID: 1388

by Yuanyuan Xu, Yin-Shan Lin, Xiaofan Zhou, Xinyang Shan

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

In recent years, advancements in human-computer interaction (HCI) have led to the emergence of emotion recognition technology as a crucial tool for enhancing user engagement and satisfaction. This study investigates the application of emotion recognition technology in real-time environments to monitor and respond to users’ emotional states, creating more personalized and intuitive interactions. The research employs convolutional neural networks (CNN) and long short-term memory networks (LSTM) to analyze facial expressions and voice emotions. The experimental design includes an experimental group that uses an emotion recognition system, which dynamically adjusts learning content based on detected emotional states, and a control group that uses a traditional online learning platform. The results show that real-time emotion monitoring and dynamic content adjustments significantly improve user experiences, with the experimental group demonstrating better engagement, learning outcomes, and overall satisfaction. Quantitative results indicate that the emotion recognition system reduced task completion time by 14.3%, lowered error rates by 50%, and increased user satisfaction by 18.4%. These findings highlight the potential of emotion recognition technology to enhance user experiences. However, challenges such as the complexity of multimodal data integration, real-time processing capabilities, and privacy and data security issues remain. Addressing these challenges is crucial for the successful implementation and widespread adoption of this technology. The paper concludes that emotion recognition technology, by providing personalized and adaptive interactions, holds significant promise for improving user experience and offers valuable insights for future research and practical applications.

Open Access

Article

Article ID: 1332

by Konstantin M. Golubev

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

This paper focuses on a new concept for electronic knowledge publishing based on modeling human intelligence. It discusses the limitations of current publishing methods in solving real-life problems and argues for a new type of publishing. It identifies two main streams of publishing, emotional publishing and knowledge-text publishing, and highlights the challenges of the latter. The article evaluates various attempts in the field of Artificial Intelligence, such as expert systems and neural networks, in terms of knowledge transfer and problem-solving. It suggests that understanding the principles of human intelligence, as exemplified by the character Sherlock Holmes, can contribute to the development of the proposed “Intellect Modeling” concept. Overall, the article presents a comprehensive proposal that addresses the shortcomings of existing publishing models and offers a new approach that incorporates advancements in Artificial Intelligence and Knowledge Management.

Open Access

Article

Article ID: 1257

by Hüseyin Bıçakçıoğlu, Sedat Soyupek, Onur Ertunç, Avni Görkem Özkan, Şehnaz Evirmler, Tekin Ahmet Serel

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

Rationale and objectives: Cribriform patterns are accepted as aggressive variants of prostate cancer. These adverse pathologies are closely associated with early biochemical recurrence, metastasis, castration resistance, and poor disease-related survival. A few publications exist on diagnosing these two adverse pathologies with multiparametric magnetic resonance imaging (mpMRI). Most of these publications are retrospective and have not made a meaningful difference in diagnosing adverse pathology. Fusion biopsies taken from lesions detected on mpMRI are also known to be insufficient for detecting these adverse pathologies. Our study aims to diagnose this adverse pathology using machine learning-based radiomics data from MR images. Materials and methods: A total of 88 patients with pathology results indicating the presence of a cribriform pattern and prostate adenocarcinoma underwent preoperative MRI examinations and radical prostatectomy. Manual slice-by-slice 3D volumetric segmentation was performed on all axial images. Data processing and machine learning analysis were conducted using Python 3.9.12 (Jupyter Notebook, PyCaret library). Results: Two radiologists, SE and MAG, with 7 and 8 years of post-graduate experience, respectively, evaluated the images using the 3D-Slicer software without knowledge of the histopathological findings. One hundred seventeen radiomic tissue features were extracted from T1-weighted (T1W) and apparent diffusion coefficient (ADC) sequences for each patient. Interobserver agreement for these features was analyzed using the intraclass correlation coefficient (ICC). Features with excellent interobserver agreement (ICC > 0.90) were further analyzed for collinearity between predictors using Pearson’s correlation. Variables showing a very high correlation (|r| ≥ 0.80) were disregarded.
The selected features were first-order maximum, first-order skewness, and first-order 10th percentile for ADC, and gray level size zone matrix (GLSZM) large area low gray level emphasis for T1W. PyCaret classification identified the three best models, which were blended into a single model. AUC, accuracy, recall, precision, and F1 score were 0.79, 0.77, 0.85, 0.82, and 0.83, respectively. Conclusion: ML-based MRI radiomics of prostate cancer can predict the cribriform pattern. This prognostic factor cannot be determined through qualitative radiological evaluation and may be overlooked in preoperative histopathological specimens.
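
The two-stage feature filter described above (ICC threshold, then Pearson collinearity pruning) can be sketched in stdlib-only Python. This is an illustration under stated assumptions, not the study's PyCaret pipeline: ICC values are taken as precomputed inputs because the abstract does not say which ICC form was used, and when two features are highly correlated the sketch greedily keeps the one encountered first, a tie-breaking rule the abstract does not specify. The feature names and values in the usage block are hypothetical. A small `f1_score` helper also confirms that the reported precision (0.82) and recall (0.85) are consistent with the reported F1 of 0.83.

```python
import math
from typing import Dict, List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant feature")
    return sxy / math.sqrt(sxx * syy)


def select_features(
    values: Dict[str, Sequence[float]],
    icc: Dict[str, float],
    icc_threshold: float = 0.90,
    r_threshold: float = 0.80,
) -> List[str]:
    """Keep features with ICC > icc_threshold, then drop any feature whose
    |r| with an already-kept feature is >= r_threshold (greedy, input order)."""
    stable = [name for name in values if icc[name] > icc_threshold]
    kept: List[str] = []
    for name in stable:
        if all(abs(pearson(values[name], values[k])) < r_threshold for k in kept):
            kept.append(name)
    return kept


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical per-patient feature values and ICCs (not study data).
    values = {
        "feat_a": [1, 2, 3, 4],
        "feat_b": [2, 4, 6, 8.5],  # nearly collinear with feat_a -> dropped
        "feat_c": [4, 1, 3, 2],    # r = -0.4 with feat_a -> kept
        "feat_d": [3, 1, 4, 1],    # ICC below threshold -> dropped
    }
    icc = {"feat_a": 0.95, "feat_b": 0.93, "feat_c": 0.92, "feat_d": 0.50}
    print(select_features(values, icc))    # ['feat_a', 'feat_c']
    print(round(f1_score(0.82, 0.85), 2))  # 0.83
```

Filtering on reproducibility before correlation means unstable features never get a chance to displace stable ones; a different tie-breaking rule (for example keeping the feature with the higher ICC) would be an equally reasonable design choice.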

Open Access

Article

Article ID: 1378

by Zarif Bin Akhtar

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

The advent of artificial intelligence (AI) has significantly transformed various aspects of human life, particularly information retrieval and assistance. This research presents a comprehensive evaluation of Gemini, previously known as Google Bard, a state-of-the-art AI chatbot developed by Google. Using a methodology that combines qualitative and quantitative approaches, this research assesses Gemini’s performance, usability, integration capabilities, and ethical implications. Primary data collection methods, including user surveys and interviews, were used to gather qualitative feedback on user experiences with Gemini, supplemented by secondary data analysis using tools such as Google Analytics to capture quantitative metrics. Performance evaluation involved benchmarking against other AI chatbots and technical analysis of Gemini’s architecture and training methods. User experience testing examined usability, engagement, and integration with Google Workspace and third-party services. Ethical considerations regarding data privacy, security, and biases in AI-generated content were also addressed, ensuring compliance with major regulations and promoting ethical AI practices. Data analysis was conducted using thematic and statistical methods to derive insights, acknowledging the limitations and challenges inherent in the investigation. The results offer insights into the capabilities and limitations of Gemini, with implications for future AI development, user interaction design, and ethical AI governance. By contributing to the ongoing discourse on AI advancements and their societal impact, this exploration supports informed decision-making and lays the groundwork for future research on AI-driven conversational agents.

Open Access

Article

Article ID: 1290

by Yifei Wang

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

The integration of artificial intelligence (AI) in financial decision-making processes has significantly enhanced the efficiency and scope of services in the finance sector. However, the rapid adoption of AI technologies raises complex ethical questions that need thorough examination. This paper explores the ethical challenges posed by AI in finance, including issues related to bias and fairness, transparency and explainability, accountability, and privacy. These challenges are scrutinized within the framework of current regulatory and ethical guidelines such as the General Data Protection Regulation (GDPR) and the Fair Lending Laws in the United States. Despite these frameworks, gaps remain that could potentially compromise the equity and integrity of financial services. The paper proposes enhancements to existing ethical frameworks and introduces new recommendations for ensuring that AI technologies foster ethical financial practices. By emphasizing a proactive approach to ethical considerations, this study aims to contribute to the ongoing discourse on maintaining trust and integrity in AI-driven financial decisions, ultimately proposing a pathway towards more robust and ethical AI applications in finance.

Open Access

Review

Article ID: 1141

by Priya Pareshbhai Bhagwakar, Chirag Suryakant Thaker, Hetal A. Joshiara

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

Quantum computing (QC) and quantum machine learning (QML), two emerging technologies, have the potential to completely change how we approach difficult problems in physics and astronomy, among other fields. Potentially Hazardous Asteroids (PHAs) can produce a variety of damaging phenomena that put biodiversity and human life at serious risk. Although PHAs have been identified using machine learning (ML) techniques, current approaches have reached a point of saturation, signaling the need for further innovation. This paper provides an in-depth examination of various ML and QML techniques for precisely identifying potentially hazardous asteroids. The study attempts to provide information to improve the efficiency and accuracy of asteroid categorization by combining QML techniques like deep learning with a variety of ML algorithms, such as Random Forest and support vector machines. The study highlights weaknesses in existing approaches, including feature selection and model assessment, and suggests directions for further investigation. The results highlight the significance of developing QML techniques to improve the prediction of asteroid hazards, thereby supporting enhanced risk assessment and space exploration efforts. The reviewed papers may not all be directly related, because the study only looks at generic paper reviews.

Open Access

Review

Article ID: 416

by Habib Hamam

Computing and Artificial Intelligence, Vol.2, No.1, 2024;

The integration of artificial intelligence (AI) has brought about a paradigm shift in the landscape of Neurosurgery and Neurology, revolutionizing various facets of healthcare. This article meticulously explores seven pivotal dimensions where AI has made a substantial impact, reshaping the contours of patient care, diagnostics, and treatment modalities. AI’s exceptional precision in deciphering intricate medical imaging data expedites accurate diagnoses of neurological conditions. Harnessing patient-specific data and genetic information, AI facilitates the formulation of highly personalized treatment plans, promising more efficacious therapeutic interventions. The deployment of AI-powered robotic systems in neurosurgical procedures not only ensures surgical precision but also introduces remote capabilities, mitigating the potential for human error. Machine learning models, a core component of AI, play a crucial role in predicting disease progression, optimizing resource allocation, and elevating the overall quality of patient care. Wearable devices integrated with AI provide continuous monitoring of neurological parameters, empowering early intervention strategies for chronic conditions. AI’s prowess extends to drug discovery by scrutinizing extensive datasets, offering the prospect of groundbreaking therapies for neurological disorders. The realm of patient engagement witnesses a transformative impact through AI-driven chatbots and virtual assistants, fostering increased adherence to treatment plans. Looking ahead, the horizon of AI in Neurosurgery and Neurology holds promises of heightened personalization, augmented decision-making, early intervention, and the emergence of innovative treatment modalities. This narrative is one of optimism and collaboration, depicting a synergistic partnership between AI and healthcare professionals to propel the field forward and significantly enhance the lives of individuals grappling with neurological challenges. 
This article provides an encompassing view of AI’s transformative influence in Neurosurgery and Neurology, highlighting its potential to redefine the landscape of patient care and outcomes.