Enhancing user experience in large language models through human-centered design: Integrating theoretical insights with an experimental study to meet diverse software learning needs with a single document knowledge base

Abstract

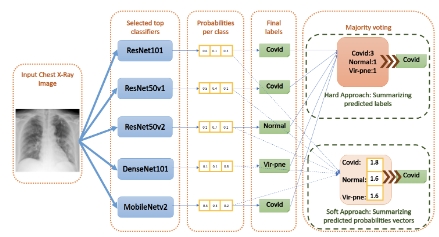

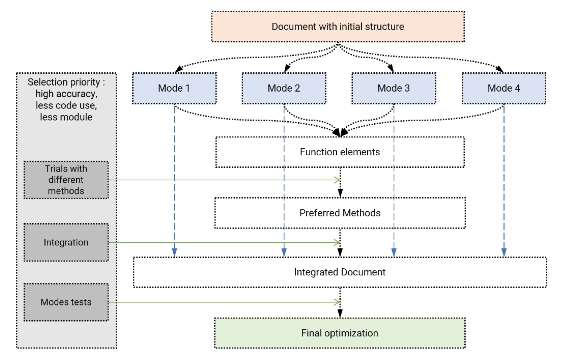

This paper begins with a theoretical exploration of the rise of large language models (LLMs) in Human-Computer Interaction (HCI), their impact on user experience (HX), and the challenges they raise. It then discusses the benefits of Human-Centered Design (HCD) principles and the possibility of applying them within LLMs, and derives six specific HCD guidelines for LLMs. Next, a preliminary experiment is presented as an example of how HCD principles can be used to enhance user experience within GPT: a single document is supplied to GPT's knowledge base as a new knowledge resource that controls the interactions between GPT and users, with the aim of meeting the diverse needs of hypothetical software learners as fully as possible. The experimental results show how the form and organization of the elements in the document, as well as GPT's relevant configurations, affect the effectiveness of interaction between GPT and software learners. A series of trials was conducted to find better methods for displaying text and images and for performing jump actions. Two template documents were compared in terms of their performance across the four interaction modes. Through continuous optimization, an improved version of the document was obtained to serve as a template for future use and research.

Copyright (c) 2024 Yuchen Wang, Yin-Shan Lin, Ruixin Huang, Jinyin Wang, Sensen Liu

This work is licensed under a Creative Commons Attribution 4.0 International License.