Enhancing data curation with spectral clustering and Shannon entropy: An unsupervised approach within the Data Washing Machine

Abstract

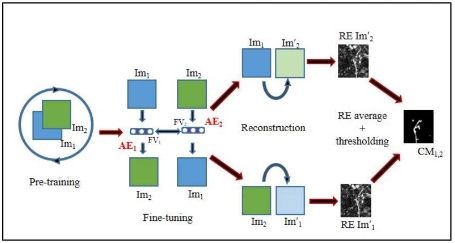

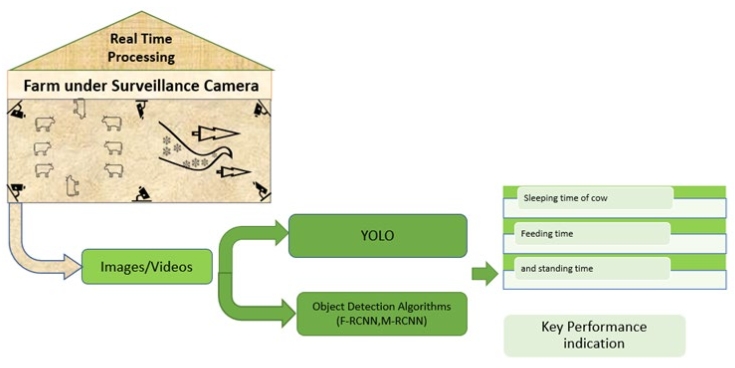

As digital data proliferates, effective data curation is pivotal for extracting meaningful insights. This study explores the integration of spectral clustering and Shannon entropy within the Data Washing Machine (DWM), a tool designed for unsupervised data curation. Spectral clustering, known for its ability to handle complex, non-linearly separable data, is investigated as an alternative clustering method for the DWM. Shannon entropy is employed as a metric to evaluate and refine cluster quality, measuring the information content and homogeneity of each cluster. The DWM prototype is tested on diverse datasets to assess the performance of spectral clustering in conjunction with Shannon entropy. Results indicate that spectral clustering, when combined with entropy-based evaluation, significantly improves clustering outcomes, particularly on datasets with varied density and complexity. This study highlights the complementary roles of spectral clustering and Shannon entropy in advancing unsupervised data curation, offering a more nuanced approach to handling diverse data landscapes.
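The abstract pairs two ideas: spectral clustering to group records, and Shannon entropy to score how homogeneous each resulting cluster is. The Python sketch below illustrates that combination under several assumptions that are not taken from the paper: records are plain token lists, pairwise similarity is Jaccard token overlap, spectral clustering follows the normalized-affinity recipe of Ng, Jordan and Weiss with the number of clusters `k` supplied by hand, and cluster quality is the entropy H = -Σ p_i log2 p_i of the pooled tokens in a cluster (lower meaning the records share more tokens). The function names `shannon_entropy`, `jaccard_similarity`, `kmeans`, `spectral_clusters` and `cluster_entropies` are hypothetical and are not the DWM's actual API or scoring formula.

```python
import math
from collections import Counter

import numpy as np


def shannon_entropy(tokens):
    """Shannon entropy H = -sum(p_i * log2 p_i) of a token list, in bits."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def jaccard_similarity(a, b):
    """Token-set overlap |A & B| / |A | B| (assumed pairwise similarity)."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def kmeans(X, k, iters=50):
    """Plain Lloyd's k-means with deterministic farthest-first seeding."""
    centers = [X[0]]
    for _ in range(1, k):
        # Squared distance from each point to its nearest chosen center.
        dist = np.min([np.sum((X - c) ** 2, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(iters):
        sq = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = np.argmin(sq, axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centers[j] for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def spectral_clusters(records, k):
    """Ng-Jordan-Weiss style spectral clustering of token-list records."""
    n = len(records)
    # Affinity matrix W with a zero diagonal.
    W = np.array([[jaccard_similarity(records[i], records[j]) if i != j else 0.0
                   for j in range(n)] for i in range(n)])
    d = W.sum(axis=1)
    d[d == 0] = 1e-12  # avoid division by zero for records with no neighbours
    d_inv_sqrt = 1.0 / np.sqrt(d)
    M = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]  # D^-1/2 W D^-1/2
    _, vecs = np.linalg.eigh(M)   # eigenvalues come back in ascending order
    U = vecs[:, -k:]              # eigenvectors of the k largest eigenvalues
    norms = np.linalg.norm(U, axis=1, keepdims=True)
    norms[norms == 0] = 1.0
    return kmeans(U / norms, k)   # cluster the row-normalized embedding


def cluster_entropies(records, labels):
    """Entropy of the pooled tokens in each cluster (lower = more homogeneous)."""
    pooled = {}
    for rec, lab in zip(records, labels):
        pooled.setdefault(int(lab), []).extend(rec)
    return {lab: shannon_entropy(toks) for lab, toks in pooled.items()}


# Toy records: two groups of near-duplicate name/address tokens.
records = [
    ["john", "smith", "123", "oak", "st"],
    ["jon", "smith", "123", "oak", "st"],
    ["john", "smith", "123", "oak", "street"],
    ["mary", "jones", "9", "elm", "ave"],
    ["mary", "jones", "9", "elm", "avenue"],
    ["marie", "jones", "9", "elm", "ave"],
]
labels = spectral_clusters(records, k=2)
print(labels.tolist())
print(cluster_entropies(records, labels))
```

In this toy input the two groups share no tokens, so the affinity matrix is block-diagonal and each group should land in its own cluster. A cluster that mixed records from both groups would pool more distinct tokens and therefore score a higher entropy, which is the signal an entropy-based quality check would act on. Farthest-first seeding is used only to keep the sketch deterministic; a production setting might instead rely on a library implementation such as scikit-learn's `SpectralClustering`.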

Copyright (c) 2024 Erin Chelsea Hathorn, Ahmed Abu Halimeh

This work is licensed under a Creative Commons Attribution 4.0 International License.