Exploring other clustering methods and the role of Shannon Entropy in an unsupervised setting

Abstract

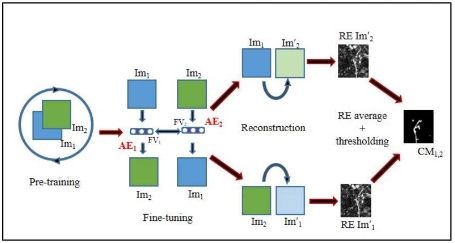

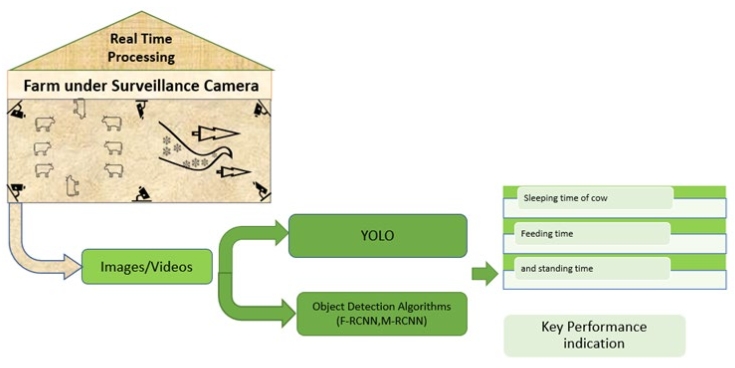

The exponential growth of data in information science and health informatics presents both challenges and opportunities, and it demands innovative approaches to data curation. This study evaluates feasible clustering methods within the Data Washing Machine (DWM), a novel tool designed to streamline unsupervised data curation. The DWM integrates Shannon Entropy into its clustering process, so that clustering strategies can be refined adaptively according to the entropy levels observed within data clusters. Testing of the DWM prototype on a range of annotated test samples produced promising outcomes, particularly on high-quality data. Results were weaker on poor-quality data, which underlines the importance of data quality assessment and improvement for successful data curation. To enhance the DWM’s clustering capabilities, this study explored alternative unsupervised clustering methods, including spectral clustering, autoencoders, and density-based clustering such as DBSCAN, with the aim of improving the DWM’s ability to handle diverse data scenarios. The findings demonstrate the feasibility of constructing an unsupervised entity resolution engine with the DWM and highlight the role of Shannon Entropy in enhancing unsupervised clustering methods for data curation. The study underscores the need for innovative clustering strategies and robust data quality assessment when working with modern data. The paper is organized into the following sections: Introduction, Methodology, Results, Discussion, and Conclusion.
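To make the idea of "entropy levels observed within data clusters" concrete, the following is a minimal sketch of one way such a measure could be computed. It is an illustration only, not the DWM's implementation: the abstract does not specify the entropy formulation used, and the function names (`shannon_entropy`, `cluster_entropy`), the column-wise averaging, and the sample records are all assumptions introduced here.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy, in bits, of the empirical distribution of `values`."""
    counts = Counter(values)
    total = sum(counts.values())
    # H = sum over distinct values of p * log2(1 / p)
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def cluster_entropy(records):
    """Mean per-column entropy of a cluster of equal-length token tuples.

    Hypothetical measure for illustration: 0.0 means every record in the
    cluster is identical; larger values mean the records disagree more.
    """
    if not records:
        return 0.0
    columns = list(zip(*records))
    return sum(shannon_entropy(col) for col in columns) / len(columns)


# Invented sample clusters: one with identical records, one with mixed records.
homogeneous = [("john", "smith", "1980"), ("john", "smith", "1980")]
mixed = [
    ("john", "smith", "1980"),
    ("jon", "smyth", "1980"),
    ("mary", "jones", "1975"),
]

print(cluster_entropy(homogeneous))
print(cluster_entropy(mixed))
```

Under this sketch, a cluster whose entropy stays above some chosen threshold could be flagged for re-clustering with stricter parameters, which is one plausible reading of the adaptive refinement described in the abstract.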

Copyright (c) 2024 Erin Chelsea Hathorn, Ahmed Abu Halimeh

This work is licensed under a Creative Commons Attribution 4.0 International License.