GestureID: Gesture-Based User Authentication on Smart Devices Using Acoustic Sensing

Abstract

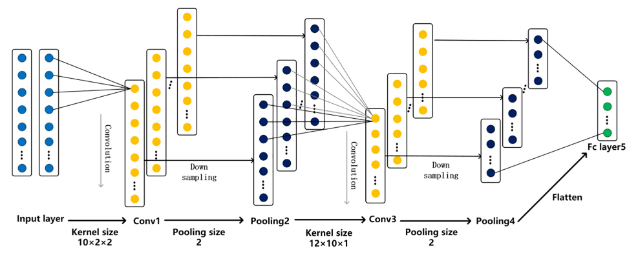

User authentication on smart devices is crucial to protecting user privacy and device security. As new attacks emerge, existing authentication methods based on physiological features, such as fingerprint, iris, and face recognition, are vulnerable to forgery and attacks. This paper proposes GestureID, a system that uses acoustic sensing to distinguish hand features among users. A speaker emits acoustic signals, and a microphone receives the echoes reflected by the user’s hand movements. To ensure system accuracy and effectively distinguish users’ gestures, a second-order differential-based phase extraction method is proposed. This method calculates the gradient of the received signals to separate the effects of the user’s hand movements on the transmitted signal from the background noise. The second-order differential phase and phase-dependent acceleration information are then fed into a Convolutional Neural Network-Bidirectional Long Short-Term Memory (CNN-BiLSTM) model to capture hand motion features. To reduce the time needed to collect data when registering a new user, transfer learning is used: the user authentication model is built on a pre-trained gesture recognition model. As a result, accurate user authentication can be achieved without large amounts of training data. Experiments demonstrate that GestureID achieves 97.8% gesture recognition accuracy and 96.3% user authentication accuracy.
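The idea behind differential phase extraction can be illustrated with a small sketch. This is not the GestureID implementation, only one plausible reading of the abstract under stated assumptions: the received signal has already been demodulated to complex baseband samples, static reflections contribute a constant complex offset, and the moving hand contributes a rotating component. The function names `unwrap`, `diff`, and `differential_phase_features` are hypothetical and chosen for illustration.

```python
import cmath
import math


def unwrap(phases):
    """Remove 2*pi jumps so that consecutive phase values are continuous."""
    out = [phases[0]]
    for p in phases[1:]:
        delta = p - out[-1]
        # Fold the step into (-pi, pi] by removing whole turns.
        delta -= 2 * math.pi * round(delta / (2 * math.pi))
        out.append(out[-1] + delta)
    return out


def diff(values):
    """First-order difference of a sequence (works for real or complex)."""
    return [b - a for a, b in zip(values, values[1:])]


def differential_phase_features(baseband):
    """Return (velocity-like, acceleration-like) phase features.

    Assumption: `baseband` holds complex samples of the form
    static_offset + dynamic_component. Differencing the complex samples
    cancels the constant static offset, so the phase of the result
    follows the moving reflector only.
    """
    dynamic = diff(baseband)                      # static offset cancels here
    phase = unwrap([cmath.phase(z) for z in dynamic])
    velocity_like = diff(phase)                   # first-order phase difference
    acceleration_like = diff(velocity_like)       # second-order phase difference
    return velocity_like, acceleration_like


if __name__ == "__main__":
    # Synthetic echo: a strong static path plus a hand path whose phase
    # grows quadratically in time (constant acceleration).
    k = 0.01
    static = complex(5.0, 3.0)
    samples = [static + 0.8 * cmath.exp(1j * k * n * n) for n in range(50)]
    vel, acc = differential_phase_features(samples)
    print("first acceleration-like values:", acc[:3])
```

In this synthetic case the acceleration-like feature is nearly constant even though the static path is much stronger than the hand echo, which is the property that makes such features attractive as model inputs. Real recordings would also need band-pass filtering and denoising before this step.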
